The AI will see you now: How the artificial intelligence used in recruitment tools could leave disabled people out of the running, posing a risk to workplace equality

By Sunita Ghosh Dastidar @sunitadastidar

AI-powered interviews

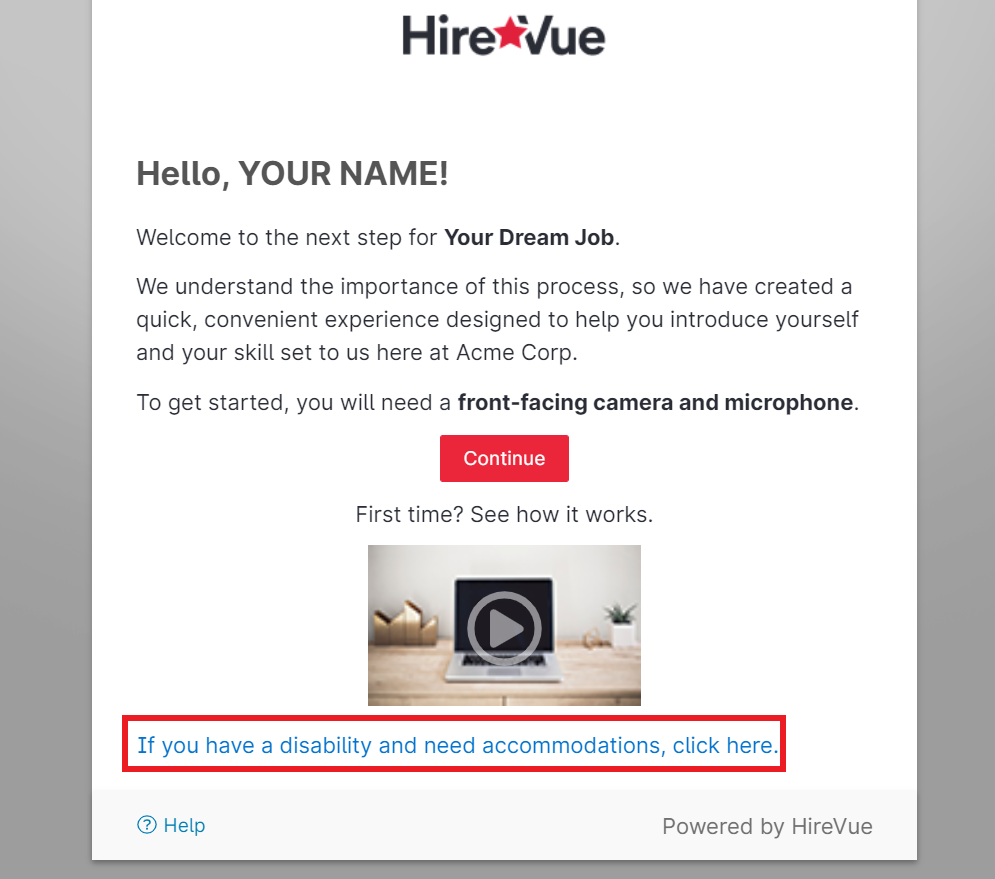

Anna* stared at her laptop screen, waiting for her automated one-way video job interview to begin. When she signed on, she was prompted to disclose her disability to receive accommodations during the hiring process. This poses a dilemma for applicants with disabilities.

“I’m scared that disclosing my disability will mean that I won’t be given the job,” says Anna, who has autism. “But if I don’t click on it then I won’t be given extra time to understand what the questions are asking me.”

Since the start of the Covid-19 pandemic, with traditional face-to-face interviews on hold, hiring managers have had to reconsider how to assess candidates efficiently. The solution for many companies is asynchronous video interviews, or AVIs, in which candidates record themselves answering a predetermined set of questions with no human interviewer present.

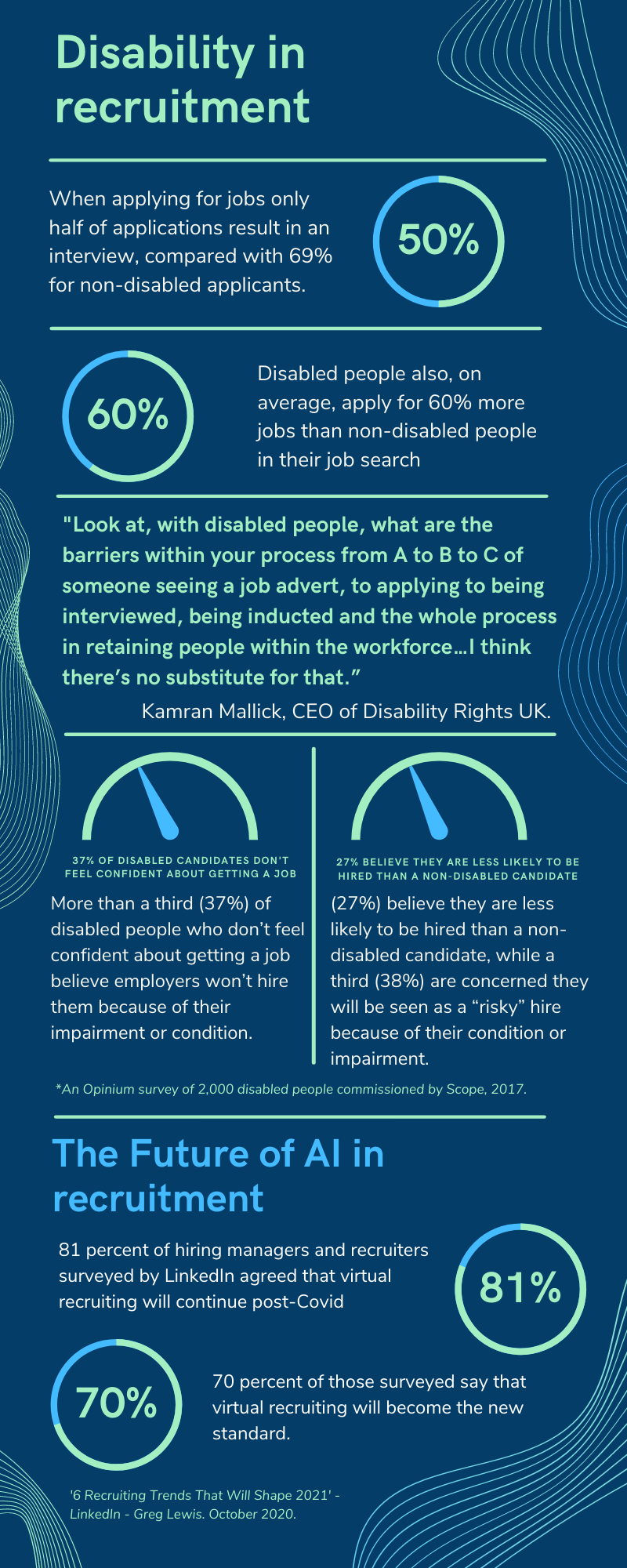

Although restrictions have now been lifted, many recruiters are still choosing to use the format to sort through stacks of applications. Last year, 81 per cent of hiring managers and recruiters surveyed by LinkedIn agreed that virtual recruiting will continue post-Covid, and 70 per cent said that it will become the new standard.

About 40 per cent of large companies currently use artificial intelligence (AI) when screening potential candidates, according to a global report by business consultancy Accenture.

In most cases, these recordings are assessed using AI software. One provider is HireVue, which is used by 100 high-profile companies such as Unilever, Goldman Sachs and PwC. Its AI-powered software analyses the language used in videos recorded by candidates, then assesses each candidate’s suitability for a job by scoring them on a range of job-related competencies such as team orientation, adaptability, or willingness to learn.

Overview of the HireVue platform. Credit: HireVue

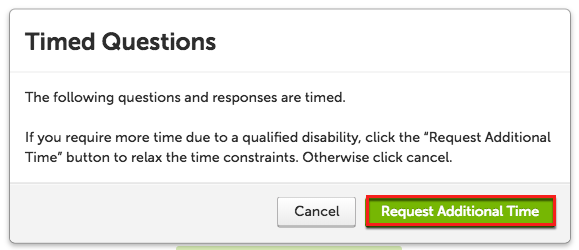

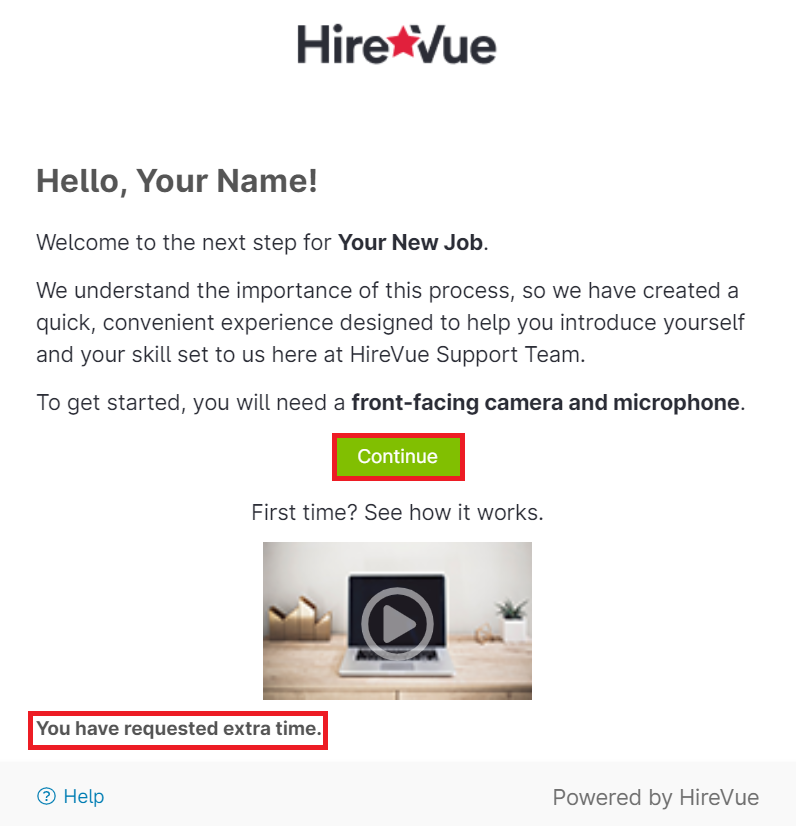

HireVue - Steps to request accommodations e.g. for disabled candidates. Credit: HireVue

One of the accommodations is for extra time, which could help candidates with conditions such as dyslexia. Credit: HireVue

Until January last year, HireVue’s software used facial analysis to examine candidates’ micro-expressions and discern characteristics such as their perceived “enthusiasm.” This controversial feature was axed following public outcry over its potential to discriminate against people with disabilities, such as those with atypical speech patterns.

As shown in the Twitter thread below, a complaint filed by the Electronic Privacy Information Center (EPIC) to the Federal Trade Commission in November 2019 cited HireVue's use of facial recognition to collect data from job candidates and analyse their expressions of emotion and personality in order to evaluate their "psychological traits," "emotional intelligence," and "social aptitudes."

Yesterday, HireVue announced that it will stop using its facial expression analysis feature, a key part of its #artificialintelligence video interview tool – a big win for job applicants with disabilities. #DisabilityRights #DisabilityJustice https://t.co/jO8t6iD3T8

— Center for Democracy & Technology (@CenDemTech) January 13, 2021

“Based on the company’s research on the role of linguistic vs visual features in evaluating job candidates, and seeing significant improvements in natural language processing to date, HireVue made the decision to remove visual analysis from its new assessment models,” explains a HireVue spokesperson. “For similar reasons, speech features (like tone-of-voice) are no longer included in our models. The algorithms we are currently releasing are based on language only.”

These platforms can sometimes exclude candidates in ways that may not be obvious to a potential employer, though.

Innate biases

AI-based platforms such as HireVue are often trained on data collected about previous applicants or current staff to predict the likelihood that a candidate will succeed in a position.

“[The AI software is] trained on standard datasets that represent ‘good candidates’ and ‘less suitable candidates’,” said Prof Peter Bentley, an honorary professor of computer science at University College London (UCL). “They may be based on a knowledge-based system comprising of an algorithm or set of rules that define whether a candidate is suitable or not.”

“If the rules do not include relevant options for disabled candidates then the AI will be unlikely to classify them as a ‘good candidate’, and so they will be given low priority compared to those classified as such,” he added.
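The kind of rule-based screening Prof Bentley describes can be illustrated with a minimal sketch. Everything in it is hypothetical: the `Candidate` fields, the thresholds and the `is_good_candidate` rules are invented for illustration and do not represent HireVue's or any other vendor's system. It simply shows how rules written around a "typical" applicant can push a strong candidate down the list when no rule accounts for an accommodation such as extra time.

```python
from dataclasses import dataclass


@dataclass
class Candidate:
    # All fields are hypothetical, chosen only to illustrate the point.
    name: str
    answered_in_time: bool      # finished within the standard time limit
    years_continuous_work: int  # unbroken employment history, in years
    relevant_skills: int        # number of required skills demonstrated


def is_good_candidate(c: Candidate) -> bool:
    """Apply fixed rules that assume a 'typical' applicant.

    No rule allows for extra time or gaps in employment, so a candidate
    who needed either fails regardless of skill.
    """
    return (
        c.answered_in_time
        and c.years_continuous_work >= 3
        and c.relevant_skills >= 4
    )


def rank(candidates: list[Candidate]) -> list[Candidate]:
    # Candidates classified as 'good' sort first; the sort is stable,
    # so the original order is kept within each group.
    return sorted(candidates, key=lambda c: not is_good_candidate(c))


applicants = [
    # B shows the most skills but used extra time and has a career gap.
    Candidate("B", answered_in_time=False, years_continuous_work=1, relevant_skills=6),
    Candidate("A", answered_in_time=True, years_continuous_work=5, relevant_skills=4),
]

ranked = rank(applicants)
print([c.name for c in ranked])
```

In this toy example candidate B demonstrates more skills than candidate A, yet is ranked below A because the rules treat the standard time limit and an unbroken work history as requirements.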

Experts argue that the data used to train AI software to recognise a good candidate can introduce biases that exclude disabled people. “Biases are introduced into AI systems by the designers who develop them and can continually generate bias through inputs and updates during the system’s lifetime,” said Prof Selin Nugent, Assistant Director of Social Science Research at The Institute for Ethical Artificial Intelligence.

“Designing the [algorithm] involves deciding the parameters that define the system’s operation at what developers determine is an optimal level,” she added. “Defining such parameters without acknowledging their social implications potentially further disadvantages people of different ethnicity, gender, ability and economic backgrounds who already regularly struggle to be equally represented.”

Kamran Mallick, CEO of Disability Rights UK, a pan-disability charity that aims to create a society where disabled people have equal rights and equality of opportunity, said: “One of the biggest problems with any AI system is that the people creating it, and creating the data, don’t include disabled people. I imagine there are similar parallels with Black and Asian people. If you don’t fit the normality of a white workforce, for example.”

Prof Bentley explains: “[These groups] would be classed as statistical outliers and so less likely to be considered by the algorithms or human software developers (even if those outliers have exceptional talent).”

But even if the algorithm could account for outliers, disability is not homogeneous, and the way it presents itself varies widely from person to person. For example, one candidate with autism could have more developed language and communication skills than another.

Kamran Mallick, CEO of Disability Rights UK, on the 'normal' model.

HireVue acknowledges that each person’s experience with disability is different, and says it is working to consistently update its AI system to produce outcomes that account for the broad range of characteristics presented by people with a disability. “The variety in how disability is presented among candidates makes this a challenge that all candidate evaluation procedures face. We have designed our technology around current best practices in the industry and are consistently working to learn and improve as new research becomes available,” says Lindsey Zuloaga, Chief Data Scientist at HireVue.

“Novel assessment formats such as video-based and game-based assessments may widen access for certain populations, but they also bring with them unique challenges in ensuring inclusion and fairness for those with disabilities.”

“At the assessment and selection stage, psychometric assessments can be adjusted to meet the specific needs of an applicant, providing an opportunity for applicants to raise any special needs they may have in relation to taking the assessment,” Zuloaga adds.

The use of AI within the recruitment process has been heavily criticised. In a study published in September 2019, the Ada Lovelace Institute, an independent organisation set up to ensure that data and AI work for people and society, found in partnership with YouGov that 76 per cent of 4,109 people surveyed were uncomfortable with the use of AI in recruitment, as they feared that it would not be used ethically by companies.

“The appeal of using AI in HR is that it can automate tedious, repetitive tasks so that HR professionals can focus on more strategic and complex tasks. This saves time and money in operations,” says Prof Nugent. “AI systems are known to quite efficiently reproduce and perpetuate deeper structural inequalities, a dangerous quality when making employment decisions.”

She adds: “Transparency around the pitfalls and shortcomings of these systems are less frequently communicated and HR professionals are not afforded the tools to critically evaluate whether outcomes meet their expectations and responsibility to promote fairness. This is an unsustainable situation.”

A global report on risk managers by business consultancy Accenture found that 58 per cent identified AI as the biggest potential cause of unintended consequences over the next two years, but only 11 per cent described themselves as fully capable of assessing the risks associated with adopting AI organisation-wide.

And LinkedIn’s 2021 recruitment trends report says that hiring managers will not only be responsible for delivering a diverse pipeline of candidates but will also be held accountable for moving those candidates through the recruitment process. Hiring processes are expected to be restructured to reduce bias, from building diverse interview panels to mandating data-driven reporting against diversity goals.

“Having input into your recruitment processes by people with lived experiences of your recruitment process. If you don’t have that, then find disabled people who can advise you. Value them and their time and expertise by treating them as you would treat any other consultant – you’re buying their expertise – to bring people in,” explains Mallick.

“The lived experience of disabled people is equally valuable to you in order to make sure that your organisation reflects the community within which it operates. Get disabled people to be part of the designing and recruitment process. Look at, with disabled people, what are the barriers within your process from A to B to C of someone seeing a job advert, to applying to being interviewed, being inducted and the whole process in retaining people within the workforce… I think there’s no substitute for that.”

A spokesperson from HireVue said: “Since 2019 we’ve worked closely with our partners Integrate Autism Employment Advisors, a non-profit organisation whose mission is to help companies identify, recruit, and retain qualified professionals on the autism spectrum. The experts at Integrate act as trusted advisors on product development to ensure that all of our products are designed with the needs of neurodiverse job seekers in mind. For instance, the question library in our newest product, HireVue Builder, was written in collaboration with our partners at Integrate to better meet the needs of neurodiverse candidates.”

"Integrate uses HireVue’s AI-powered assessment platform to train individuals on the autism spectrum to clear interviews." https://t.co/fJ0GI5RERd

— HireVue (@hirevue) October 1, 2019

“The partnership with Integrate has seen the deployment of HireVue’s virtual interviewing and assessment technology to evaluate and coach hundreds of neurodivergent candidates in their network. The partnership also includes an internship program in-house at HireVue to attract and retain top talent who are neurodivergent. We’ve also worked with the Integrate team to create candidate and employer best practices documents for hiring neurodivergent candidates which can be found here: tips for candidates; tips for employers.”

“We remain committed to ensuring our platform is unbiased for individuals with a neurodiverse profile.”

HireVue says that it is committed to conducting regular internal and independent external reviews of its technology, and that a recent “algorithmic audit” by O’Neil Risk Consulting & Algorithmic Auditing (ORCAA) concluded: “The HireVue assessments work as advertised with regard to fairness and bias issues; ORCAA did not find any operational risks with respect to clients using them.”

Image Credits 📷

Man at laptop - Lukas Blaskevicius @lukas_blass via Unsplash.

Woman and client - Marcus Aurelius via Pexels.

Data with handshake - Pressmaster via Pexels.

AI font - Aneesh via Fontspace

Video Credits 📹

Multiple clips - HireVue

Codes - Mikhail Nilov via Pexels.

Handshake - fauxels via Pexels.

Woman in wheelchair - Marcus Aurelius via Pexels.

Woman with laptop - Marcus Aurelius via Pexels.

CV - cottonbro via Pexels.

Music - Positive Motivation by https://www.purple-planet.com