What's happening with mis- and disinformation?

Are there solutions on the horizon?

Did you know that eating an orange in a shower can reduce anxiety? What about those videos of the US Marines entering Los Angeles on June 8th to quell the immigration riots -- did you see them? Oh, and good news: DIY brand B&Q is giving away free garden soil!

Here's the problem: If you were online, you probably saw all of these. Yet they were all false.

Infographic by Dianna Bautista, made in Canva

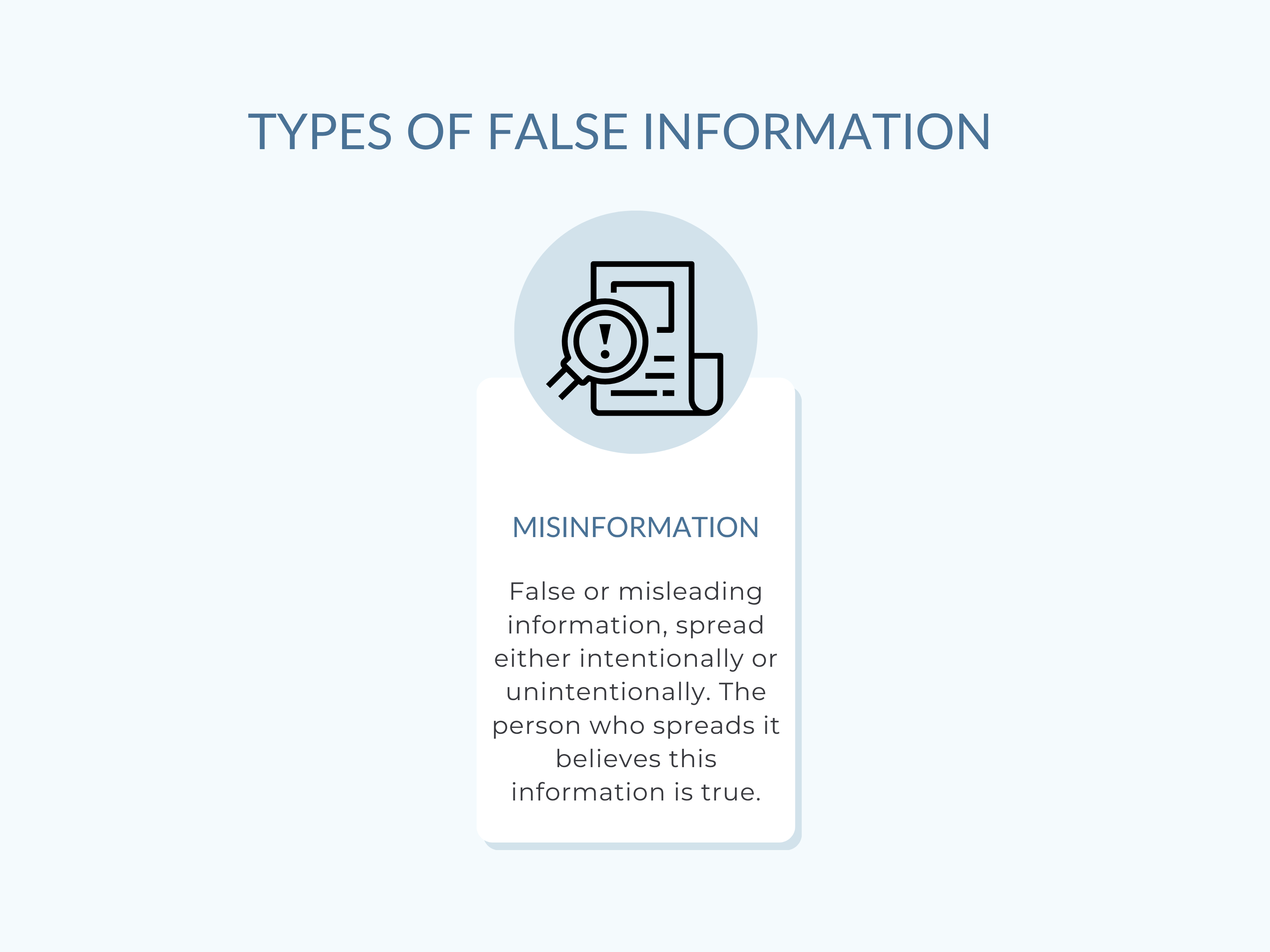

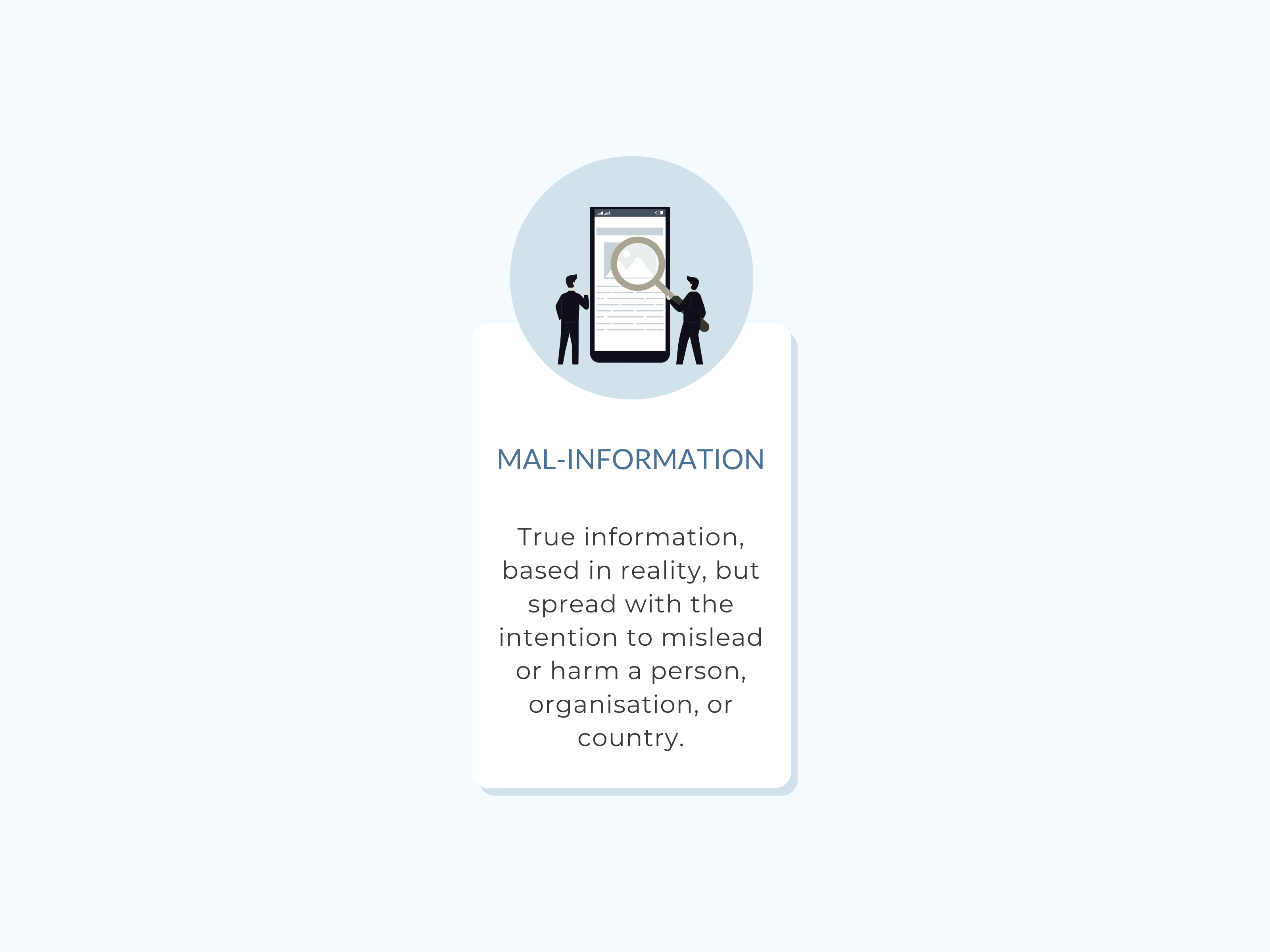

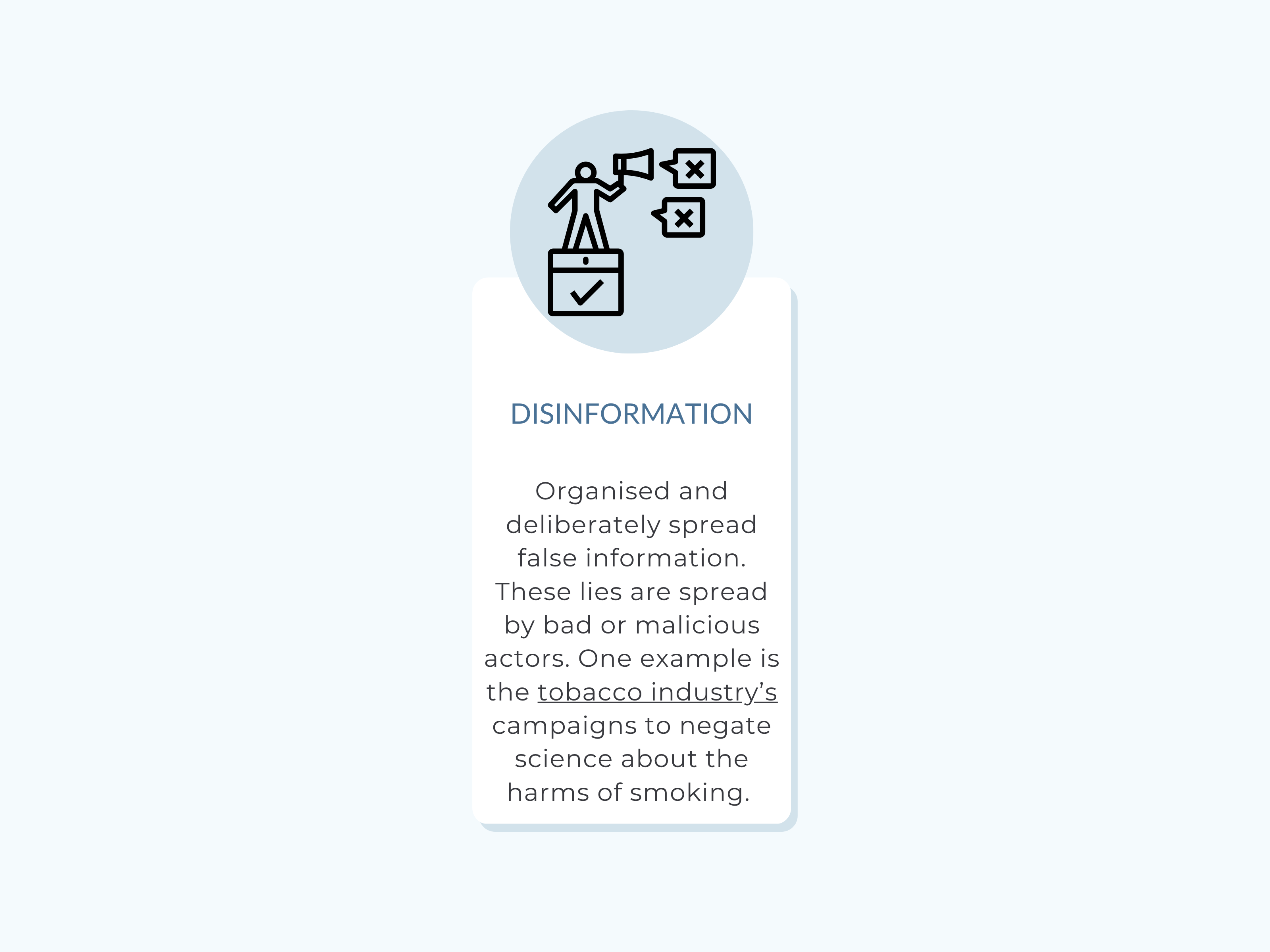

Mis- and disinformation case studies range from false trends, started innocuously, to completely inaccurate lies and fake news, purposely spread by bad actors.

As Dr. Jon Roozenbeek, a lecturer in psychology and security at King's College London, explains, the different types of mis- and disinformation can have an impact varying from small to extreme.

While examples such as the B&Q discount can be considered relatively harmless, others, such as the Marines video, which was actually dated June 4, before the protests began, have a greater effect.

What's becoming increasingly clear is that misinformation is a growing issue in today's world. So what factors affect its spread, and what are the potential solutions to combat it?

Misinformation is a global problem.

May 30, 2025: A Guardian investigation found that over half of the top 100 TikToks in the mental health space contain misinformation.

With people increasingly turning to social media for mental health, MPs and psychologists are expressing concern about this finding.

Examples, which occur under the hashtag #mentalhealthtips, include eating an orange in a shower to reduce anxiety, promoting supplements such as holy basil as a treatment for anxiety, or claiming that regular emotions are signs of a serious mental health issue.

The experts divide the misinformation into four categories:

1) Misuse of therapeutic terms

2) Pathologising normal emotions

3) Anecdotal evidence

4) Unevidenced treatments/false claims.

The Online Safety Act by the UK Government aims to tackle this issue.

April 23, 2020: US President Donald Trump suggested that ingesting bleach and sunlight could cure Covid-19.

Although he would later semi-retract his words, the damage was already done.

The backstory, according to John Verrico, the Chief of Media and Community Relations at the United States Department of Homeland Security's Science and Technology Directorate (DHS S&T) for 15 years before retiring in January 2024, involved preliminary research by DHS S&T's National Biodefense Analysis and Countermeasures Center (NBACC). *

The NBACC researches questions such as how long pathogens last on surfaces and how to clean those surfaces to prevent transmission. During the Covid pandemic, its researchers studied how the virus survived on different surfaces and under different conditions. They found preliminary evidence that higher sunlight, humidity and heat helped the virus break down on metal and plastic surfaces. They also had early data showing that bleach could work as a Covid-19 disinfectant on such surfaces.

Verrico said: "Practical recommendations that could come from this could be, for example, selecting a shopping cart that is outside in the parking lot at the grocery store, rather than ones that are inside the air-conditioned facility."

The team published their findings in The Journal of Infectious Diseases.

Although the results were still preliminary, and not yet verified by external scientists, the scientists were confident in them, according to Verrico.

He described how the scientists took the findings up the ladder to the S&T Under Secretary who then provided a progress report to the DHS Secretary, who then reported it to the COVID Task Force.

The NBACC scientists were hesitant to bring their findings to the White House because the results lacked verification and support from other studies, but Verrico alleges the task force did just that. Next, according to Verrico, the White House, although the scientists had made it aware that the data was preliminary, immediately called a press conference.

He said: "We wanted the scientific community to validate it before we went any further. But politics got in the way and said, 'No, no, we need to get this out faster.' And obviously, when you have a pandemic going on, you do want to take whatever positive action as soon as possible, but you don't want to have a knee-jerk reaction and do things the wrong way. That's what our concern was."

At the press conference, the S&T Under Secretary, Bill Bryan, tentatively described the early findings.

However, in the remarks that followed, President Trump made his infamous suggestion about ingesting bleach and sunlight.

Verrico recalled the aftermath: months of having to dispel the misinformation. The DHS S&T scientists had to do media interview after media interview. At the same time, external studies were validating their initial research.

While noting that elected officials are not experts in health, and therefore could misinterpret the results, Verrico said: "The potential deadly implications of people blindly following these suggestions are staggering, especially when you consider this was around the same time that people were digesting laundry detergent pods."

May 7-10, 2025: Misinformation and disinformation proliferated at an incredible speed during the India-Pakistan conflict. Old videos, AI-generated content, and video game footage were spread on social media as real-time footage. The false information made it to the TV media in India, exacerbating the problem.

See the full case study below

A case study in misinformation and disinformation: Truth is lost in the fog of war

India and Pakistan have a history of tension dating back to their separation in 1947.

One key area of dispute is that both nations claim Kashmir. The most recent development in this history involved false information spreading alongside physical conflict.

April 22: Militants killed 26 tourists, 25 Indian nationals and one Nepalese national, in Pahalgam, Indian-administered Kashmir. It was the deadliest terrorist attack on Indian territory since the 2008 Mumbai attacks. India blamed Pakistan, saying it backed the armed group, and arrested two Pakistani nationals. Pakistan said it had no role in the attack.

Immediately afterwards, the Indian government blocked 16 Pakistani YouTube channels, including Dawn, an established news outlet.

April 28: For the fourth night, the two countries exchanged gunfire across the de facto border separating the Indian- and Pakistani-administered parts of the disputed region of Kashmir. India said it had responded to “unprovoked small arms fire” from Pakistan.

May 6-7: India launched Operation Sindoor in response to the April 22 militant attack. Just after midnight, it attacked "terrorist-infrastructure" sites in Pakistan and the Pakistan-administered areas of Kashmir. Pakistan disputed this, saying civilians were also targeted.

Over the course of the night, Pakistan said the air strikes hit six locations. According to the BBC, Pakistan claimed that 26 people were killed and 46 were wounded in the strikes and shelling. Live reporting from Al Jazeera said that India claimed it had actually launched attacks at nine sites and that at least 10 civilians were killed due to cross-border activity by Pakistan.

During the conflict, Pakistan claimed to have shot down five Indian fighter jets. India neither confirmed nor refuted this claim.

At the same time, online misinformation and disinformation were running rampant. Accounts from both countries shared false information, which eventually ended up on TV screens in India.

May 8: India requested that X suspend over 8,000 accounts in India. These included Pakistani celebrities, politicians, and media organizations. X reluctantly agreed.

A truce was called on May 10...

Despite this, both sides still claim the other country violated the truce in the first 24 hours.

Uzair Rizvi, the head of media literacy at Logically Facts until this May, was checking his X feed one last time on the night of May 6 when he first saw a post saying that India had attacked Pakistan. The India-based fact-checker then described a five-hour window of information flooding in during the early morning of May 7. As he quickly found out while fact-checking the videos, posts and other information associated with the conflict, misinformation from both India and Pakistan was spreading quickly on the platform.

Rizvi said: "There was so much of vacuum because the news, or the government sources, was not updating it in real time. Obviously since it was happening late in the night, you couldn't have any footage. You didn't have any real time news which was coming in because the attack was happening on the border."

Checking both Indian and Pakistani accounts, he saw false information from both countries. Indian accounts were saying that India had downed Pakistani fighter jets. Pakistani accounts were sharing old images, claiming that they were evidence of the Pakistan Air Force downing Indian fighter jets.

He explained: "The speculation started coming in. For example people were sharing old images or videos saying that this could be a fighter jet, which could have been down by Pakistan Air Force."

Eventually, the misinformation jumped from social media to the mainstream TV media, giving the false information a second life.

"In these sort of breaking news situations where there's real time news and so much of information, there's also a vacuum because of conflict. When there is a fog of war happening, you don't get updates from the government or like, because it's happening late in the night"

- Uzair Rizvi, Former Head of Media Literacy and Fact-Checker at Logically Facts

The Center for the Study of Organized Hate (CSOH) in the United States analyzed 1,200 posts from May 7 across four social media platforms: X, Facebook, Instagram and YouTube. As stated on its website, the report revealed that the "information did not simply circulate, it metastasized."

The report noted the "staggering" volume and speed of misinformation and disinformation. It took the form of fake, unrelated or old videos, AI-generated videos of political leaders, and even a screenshot of a non-existent news article.

Throughout the night, Rizvi saw videos from Gaza and Ukraine being presented as part of the current conflict. Even video game clips were being cited as real-time footage. Using reverse image searches and other verification techniques, he was able to identify the correct origin of the videos. At the same time, fact-checkers across the globe were also checking this information.

However, the videos were also being shared by influencers and verified journalists on both sides. Speaking to his experience in India, Rizvi described how the videos were picked up by the Indian TV media.

He said: "If we compare it with both India and Pakistan, because I was following both the medias and journalists from both the sides, Indian media had done far worse than what the Pakistani counterparts did in sharing misinformation."

The misinformation would flare up again a couple of days later, on May 9, with Rizvi explaining how Indian media began to make further claims.

He said: "The Indian media started claiming all sorts of things like they said that the Indian Army, has actually captured some of the cities in Pakistan. Or they were claims that the Indian naval carrier has destroyed the port of the city of Karachi in Pakistan."

This sparked panic among those living in Karachi, who feared an imminent attack. Fact-checkers were able to verify that the video purporting to show an attack on Karachi was actually an unrelated Indian naval clip from 2023.

Once the panic faded, residents responded with "memes" to show they were safe, said Nighat Dad, founder of the Digital Rights Foundation (DRF), a Pakistan-based NGO, in an ABC Australia news article.

A ceasefire was declared on May 10.

The CSOH report concluded that misinformation and disinformation were "weaponized" during this conflict. It cites evidence from the Indian news outlet The News Minute, in collaboration with Mohammed Zubair, a fact-checker at Alt News, that shows disinformation being used strategically. This further intensified the situation and fed into real-world escalation, opinion, and narratives.

Worryingly, the report stated that this incident is part of "the broader global trend in hybrid warfare."

Screengrab from one of Logically Facts' fact-check reports on the events of May 7

#Thread India launched an attack on several sites in Pakistan as retaliation for the killings of 26 civilians in Kashmir last month, Pakistan claims to be responding to these attacks. However, #misinformation is being shared on SM with old content #FactCheck #OperationSindoor

— Uzair Rizvi on Bluesky @rizviuzair.bsky.social (@RizviUzair) May 6, 2025

See Rizvi's real time fact-check of false videos on social media

Watch how fact-checkers used open source investigation techniques (OSINT) to verify videos taken 'during' the conflict

The need for media literacy

The role of the TV media in the India-Pakistan conflict was significant in magnifying and legitimising false information -- something both Rizvi and the CSOH report strongly emphasised.

Half of Indian online users get their news from television, according to the Reuters Institute’s Digital News Report.

During the conflict, many news outlets wanted to be the first to report on what was happening.

Rizvi said: "Because there was no real time footage available, and they were picking up anything and everything, whatever they were seeing."

As he described, one issue at play was that many of these organisations had young journalists on social media watch who had not received proper fact-checking training during their education. This made them more likely to spread an unverified video.

Aware that this has been an issue at Indian universities, Logically Facts and other fact-check agencies in India have launched literacy initiatives at universities with media programs to combat it. These media literacy initiatives aim to teach students not only how to identify misinformation, but also how to verify it. In the past six months, Rizvi said, he has trained more than 200 students, particularly media students.

However, he notes that media literacy should not be limited to young journalists but should extend to the entire Indian population.

He said: "Media literacy still has a very long way to go in terms of targeting everyone and equipping or arming everyone with a sense of how to use social media, especially on the verification front."

Are younger generations more susceptible to misinformation?

Roozenbeek's latest paper, a joint study by the University of Cambridge and the University of British Columbia, made an interesting finding: compared to older generations, Generation Z has the greatest difficulty discerning misinformation.

This result runs counter to the idea that growing up in the digital age has given Generation Z an advantage. It also contradicts the earlier theory that older individuals are more likely to believe and spread misinformation.

Roozenbeek said: "We're seeing this age effect that we're kind of struggling to explain."

The study included participants of multiple ages across 24 countries and, as Roozenbeek explained, the researchers found the same results in different contexts.

He said: "So basically, what you're finding is that Gen Z are very, very, very good at using digital tools, but not particularly good at kind of investigating what's true or false, or having a good kind of view on what constitutes a reliable source, or being able to tell which type of persuasion technique is used in a news headline."

He thinks one possible reason has to do with age. Generation Z simply has not been exposed to as much news as older individuals and has not yet experienced the learning effect that comes with this exposure. However, this is just an early theory.

"Being digitally fluent and knowing how to use certain platforms is not the same as being digitally literate. Young people in these age groups can struggle to distinguish between trusted sources and manipulative content, particularly when it’s a part of meme culture or influencer advice."

- Katherine Howard, Head of Education and Wellbeing at Smoothwall

Katherine Howard is the Head of Education and Wellbeing at Smoothwall, a digital safeguarding firm that works with UK schools on online safety and preventing disinformation. The firm monitors harmful content and gives support and advice to teachers and parents to help keep young people safe online. Working on the front lines, Howard has made an observation similar to the finding of Roozenbeek's study: despite their growing up online, we cannot assume that Generation Z and Generation Alpha are better equipped to navigate misinformation.

Navigating it becomes particularly difficult when the misinformation is part of meme culture or comes from an admired or trusted influencer.

She explains that young people are seeking community, a sense of belonging, and connections. Many of them meet others online, whom they consider close friends.

She said: "These qualities naturally make them curious and willing to learn – but it also makes them vulnerable targets."

"Generally, misinformation becomes believable when it comes from someone they admire or trust, whether this is one of the friends they have made online or an influencer they follow closely - or if content is being shared cross-platform, such as TikTok content also being shared on Instagram."

Another issue is that mis- and disinformation targeted at children and young people does not always look like traditional fake news headlines.

She said: "It’s tailored specifically to these age groups and is embedded in everyday digital experiences."

"We see misinformation spread through viral challenges that encourage young people to participate, or conspiracy-style videos that claim parents, teachers, and the overall 'system' are 'hiding the truth'.

"While the content isn’t always overt or political, it can be emotional, trendy, or rebellious. And over time, it can fuel distrust in adult guidance and authority."

Howard emphasised that media and digital literacy is not only key but an essential life skill, given the amount of time young people spend in these spaces. However, she adds that the onus should fall not only on children but also on schools, teachers, and parents.

What are the solutions?

All the experts agree that media and digital literacy is necessary for all ages. All three cited the growing presence of AI, with Howard and Roozenbeek both mentioning it as another literacy skill to teach.

Howard said: "Information spreads faster than ever and the development of AI is outpacing legislation to regulate its use, so being able to critically assess what they are seeing online is absolutely crucial."

Both she and Roozenbeek also emphasised that responsibility and protection need to exist not only at the individual level but also on a larger scale.

She said: "Crucially, solutions and intervention need to be collaborative. No single group can tackle the disinformation crisis alone, and young people need a consistent safety net that spans both their online and offline worlds, and their school and home lives."

For Roozenbeek, interventions form a multi-layered defence system that includes tweaks to our online content and regulations that would dissuade powerful forces from using lies and disinformation to achieve their goals.

He explains: "I think if we are seeing this conflagration of incentives on the part of, for example, certain political parties in Europe, but also on the part of certain governments, like this kind of collusion, if you will, in terms of strategic goals being achieved through disinformation. If we're seeing that collusion happening, then, then it's probably a good idea to have very strict rules about that."

Whether it be about eating an orange in the shower or active disinformation campaigns, the need for these interventions is more pressing than ever.

*The NBACC has been contacted for a right of reply.